Monitoring Online Discourse During An Election: A 5-Part Series

Using AI to track disinformation during an election campaign.

How can online disinformation be identified and tracked? KI Design provided social media monitoring solutions for the 2019 Canadian federal election.[1] KI Social is a suite of tools designed to support Electoral Management Boards (EMBs) in three main areas of social media monitoring:

Disinformation: False information spread deliberately to deceive. Related issues include:

- Misinformation: Disinformation spread innocently (without an intent to deceive).

- Trust issues: Attempts to erode voter trust in the electoral process and democracy.

Operational issues: Problems related to practical aspects of the voting process.

Political financing issues: These fall into two main categories:

- Bypassing of electoral financial spending limits.

- Under-reporting advertising expenditure.

Online voter discourse occurs in waves. It generally peaks around significant political incidents and election milestones such as:

- Voter registration (related questions and concerns)

- Closing day for candidate nominations (discussion around candidates and significant events, if any)

- Political campaign (party and candidate activity)

- Mailing of ballots (issues and concerns about the process)

- Election period:

  - Advance polling, including availability of ballots, delays, etc.

  - Election day, including availability of ballots, delays, polling station location and hours, power outages, concerns around EMB staff, etc.

- Announcement of results (usually the height of social media discourse)
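Because discourse arrives in waves, one simple way to spot these peaks is to count posts per day and flag days that sit well above the typical volume. The sketch below is an illustration under stated assumptions, not KI Social's method: it assumes each post has already been reduced to its calendar date, and the threshold (mean plus `k` population standard deviations, with a hypothetical default of `k = 2`) is an arbitrary choice. The sample data is synthetic.

```python
from collections import Counter
from datetime import date, timedelta
from statistics import mean, pstdev


def daily_counts(post_dates):
    """Count how many posts fall on each calendar day."""
    return Counter(post_dates)


def find_peaks(counts, k=2.0):
    """Return the days whose volume exceeds mean + k * population std. dev."""
    if not counts:
        return []
    values = list(counts.values())
    threshold = mean(values) + k * pstdev(values)
    return sorted(day for day, n in counts.items() if n > threshold)


if __name__ == "__main__":
    # Synthetic data: 5 posts a day for ten days, with a spike on 21 October 2019.
    start = date(2019, 10, 12)
    post_dates = []
    for i in range(10):
        day = start + timedelta(days=i)
        post_dates.extend([day] * (50 if day == date(2019, 10, 21) else 5))
    print(find_peaks(daily_counts(post_dates)))  # [datetime.date(2019, 10, 21)]
```

A fixed statistical threshold is easy to explain but ignores the expected rise in volume around known milestones; comparing each day against the same milestone in a previous election would be one alternative.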

In providing monitoring of online electoral discourse, KI Social analyses posts originating on Facebook and Instagram pages, Twitter, Reddit, Tumblr, Vimeo, YouTube (including comments), blogs, forums, and online news sources (including comments). The platform provides real-time monitoring, sentiment and emotion analysis, and geo-location mapping.

Using AI analytics and classification mining, KI Social can identify disinformation and misinformation sources, discourses, content, and frequency of posting. The platform also maps relationships between disinformation sources, and within the content they share.
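As a rough illustration of relationship mapping, one common proxy is to link accounts that post the same links. This is an assumption made for the example; the article does not say how KI Social builds its maps. The sketch counts, for each pair of accounts, how many URLs both of them posted. Account names and URLs are hypothetical.

```python
from collections import defaultdict
from itertools import combinations


def co_sharing_edges(posts):
    """Build weighted edges between accounts that shared the same URL.

    posts: iterable of (account, url) pairs.
    Returns {(account_a, account_b): number_of_shared_urls}, names sorted within each pair.
    """
    sharers = defaultdict(set)  # url -> set of accounts that posted it
    for account, url in posts:
        sharers[url].add(account)

    edges = defaultdict(int)
    for accounts in sharers.values():
        # Every pair of accounts that posted this URL gains one shared link.
        for a, b in combinations(sorted(accounts), 2):
            edges[(a, b)] += 1
    return dict(edges)


if __name__ == "__main__":
    sample = [
        ("acct_a", "https://example.com/story1"),
        ("acct_b", "https://example.com/story1"),
        ("acct_a", "https://example.com/story2"),
        ("acct_b", "https://example.com/story2"),
        ("acct_c", "https://example.com/story2"),
    ]
    print(co_sharing_edges(sample))
```

Heavily weighted edges point to accounts that repeatedly amplify the same material, which an analyst could then examine as a possible coordinated cluster.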

How Disinformation Impacts Elections, and Voters

Disinformation undermines democracy. That’s its purpose. Usually extreme in nature, fake news polarizes people, creating or exacerbating social and political divisions, and breeding cynicism and political disengagement. Even the reporting of disinformation campaigns (Russian electoral interference, for example) adds to the destabilization, making people wary of what to believe.

“Over the past five years, there has been an upward trend in the amount of cyber threat activity against democratic processes globally…. Against elections, adversaries use cyber capabilities to suppress voter turnout …”[2]

Disinformation campaigns often focus on election processes. The aim is to lower voter turnout by preventing people from voting, or simply by making them less inclined to do so. This can play out in different ways.

Below, I list examples of disinformation topics, drawn from posts in several countries, that circulate on social media during an election period.

Making it harder for people to physically vote

False information gets spread about the location of a polling station, or its hours of operation, or power outages on site causing long line-ups. Fake news like this creates confusion, making people less likely to vote.

Undermining voter trust in the EMB

Findings included:

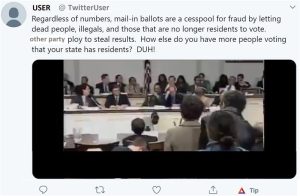

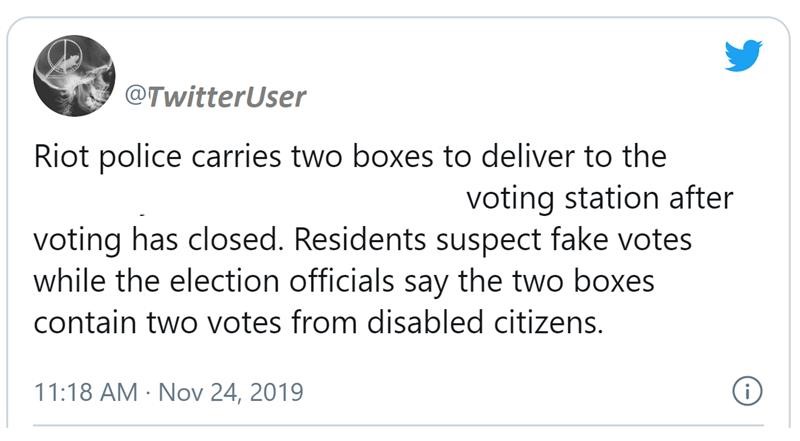

- Instances of people asserting that the ballot system was fallible, claiming, for example, that too many ballots were issued, that people were voting under the names of dead persons, or that they had received two ballots (e.g., under a maiden name and a married name) and would be voting twice. All of these claims reflect poorly on the EMB.[3]

- Claims that the ballot system is rigged.

- Posts criticizing the government for allowing illegal immigrants, foreign workers, and landed immigrants to vote.

This was fake news: no one can vote in a Canadian federal election without being a Canadian citizen. Other posts criticized the government for allowing prisoners to vote, even though this right is well established under the Canadian Charter of Rights and Freedoms. Both types of post foster a sense of disenchantment with “the system.”

- Impersonation of the EMB: false information posted under a fake EMB account.

Other posts:

- Claimed that the EMB was politically biased.

- Made claims of government interference with the EMB.

- Asserted that the EMB doesn’t check the ID of veiled women, which is false.

Undermining voter trust in the electoral process generally

Findings included:

- Posts specifically aimed at discouraging youth from voting, saying that voting changes nothing and is a waste of time.

- Other posts stated that elections are rigged and so there is no point in voting.

- Some posts gave the impression that the voting process is difficult and/or time-consuming. This discourages several groups from voting: immigrants whose first language is not an official language, disabled voters, the elderly, and even people who are simply very busy.

- Claims of Russian electoral interference added to the confusion.

Polarizing the electorate

- Some posts claimed that certain demographic groups are more prone to crime, and that certain groups vote a given way.

- Some posts spread lies about a particular political party’s platform. For example, ads were placed in a Canadian Chinese-language newspaper, allegedly by one political party, falsely asserting that another political party wanted to legalize hard drugs.

- Instances of candidate impersonation: a fake account was opened in a candidate’s name, and false information was posted.

Other sources of disinformation include:

- Fake news articles about elections appearing in media outlets in foreign countries.

Identifying Disinformation, and Those Who Disseminate It

In monitoring elections, the social media analyst is confronted with an enormous amount of data. The key to accessing and interpreting that data lies in the keywords and queries the analyst chooses to use. To be effective, these must be shaped by a close understanding of the political context. This process is “highly selective,” notes Democracy Reporting International. “It is not possible to have a comprehensive view of what happens on all social media in an election. Making the right choices of what to look for is one of the main challenges of social media monitoring.”[4]

To help circumvent this challenge, the KI Social platform:

- Detects disinformation using firehose access to global data from a wide range of sources.

- Utilizes AI to detect and measure post sentiment and emotion.

When analysts track disinformation, they usually have preconceived ideas of what it will look like. EMBs are very familiar with mainstream media, and how to track potential disinformation within it (false claims by politicians, for example). Traditional manual modes of monitoring rely on this history of prior examples, and require that human monitors read and analyze every single post to decide whether it’s disinformation.

Some EMBs expand their capabilities by leveraging automated tools. However, standard automated data queries are still based on prior examples and are therefore error-prone.

A third way of tracking disinformation is via AI-based tools. Such tools avoid these pitfalls by letting the analyst track unprecedented volumes of data, along with its location, its context, and the sentiment being expressed. Negative emotion is key, as it is the main indicator of disinformation.
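The article does not describe the AI models behind KI Social's emotion analysis. As a deliberately simplified stand-in, the sketch below swaps in a tiny hand-made lexicon (hypothetical, for illustration only) to tag a post's dominant emotion and check whether it is one of the negative emotions named in this section's formula: anger, disgust, sadness, or fear. A real deployment would use a trained classifier rather than word lists.

```python
import re
from collections import Counter

# Hypothetical toy lexicon: word -> emotion. Not a real resource.
EMOTION_LEXICON = {
    "furious": "anger", "outraged": "anger",
    "disgusting": "disgust", "corrupt": "disgust",
    "hopeless": "sadness", "pointless": "sadness",
    "afraid": "fear", "dangerous": "fear",
}
NEGATIVE_EMOTIONS = {"anger", "disgust", "sadness", "fear"}


def dominant_emotion(text):
    """Return the emotion with the most lexicon hits, or None if nothing matches."""
    words = re.findall(r"[a-z']+", text.lower())
    hits = Counter(EMOTION_LEXICON[w] for w in words if w in EMOTION_LEXICON)
    return hits.most_common(1)[0][0] if hits else None


def is_negative(text):
    """True when the dominant emotion is one of the four negative emotions."""
    return dominant_emotion(text) in NEGATIVE_EMOTIONS


if __name__ == "__main__":
    print(dominant_emotion("Voting is pointless and hopeless"))  # sadness
    print(is_negative("Polls open at 9"))  # False
```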

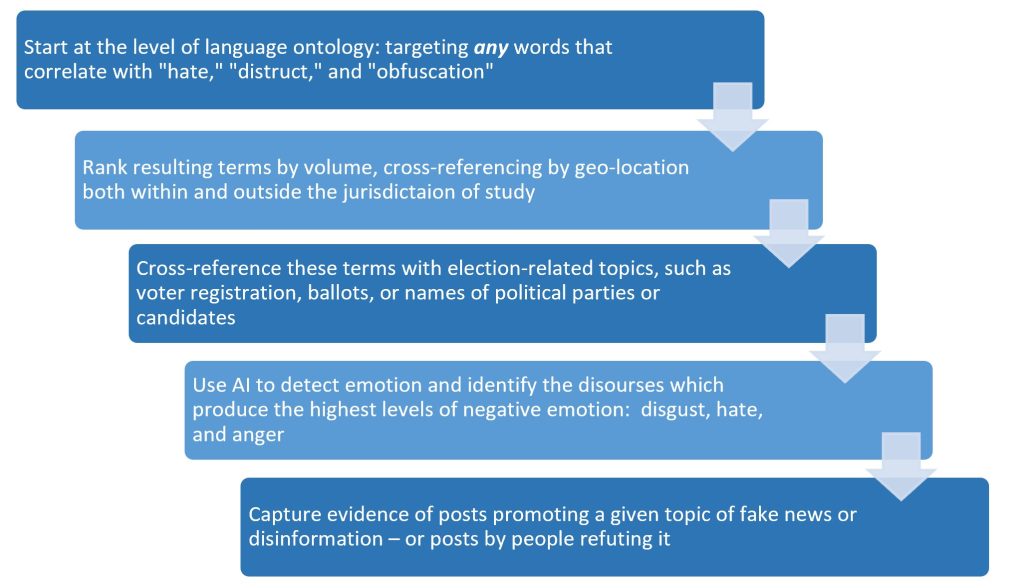

The diagram below illustrates how KI Social’s methodology can be used by EMBs to track disinformation.

Unwanted data is removed at every stage of the process. For example, if analysts are studying an election in France while Ivory Coast is holding elections at the same time, the Ivory Coast data must be filtered out of the results.
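A single filtering pass for the France and Ivory Coast example might look like the sketch below. It assumes, hypothetically, that each post carries an optional ISO 3166-1 alpha-2 country code from geolocation, and that the analyst supplies a list of exclusion terms; posts with no location are kept unless their text mentions an excluded term.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Post:
    text: str
    country: Optional[str]  # assumed ISO country code from geolocation; None if unknown


def remove_unwanted(posts, target_country, exclude_terms):
    """Keep posts located in the target country (or unlocated) that avoid excluded terms."""
    terms = [t.lower() for t in exclude_terms]
    kept = []
    for post in posts:
        if post.country is not None and post.country != target_country:
            continue  # geolocated to another country
        text = post.text.lower()
        if any(term in text for term in terms):
            continue  # mentions the other election
        kept.append(post)
    return kept


if __name__ == "__main__":
    posts = [
        Post("Bureau de vote fermé à Lyon", "FR"),
        Post("Élection à Abidjan", "CI"),
        Post("Results from Ivory Coast", None),
        Post("Longue file d'attente au bureau de vote", None),
    ]
    exclude = ["ivory coast", "côte d'ivoire", "abidjan"]
    for post in remove_unwanted(posts, "FR", exclude):
        print(post.text)
```

Because the process repeats at every stage, the same function could be re-run with an expanded exclusion list as new sources of noise are found.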

Disinformation can be expressed as a formula:

Disinformation = (hate OR distrust OR obfuscation) x volume

Election context x (anger OR disgust OR sadness OR fear)
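One possible reading of the two lines above treats each "x" as "and also" and the ORs as alternatives: a post counts when it is in the election context, expresses a negative emotion, and touches on hate, distrust, or obfuscation; the volume of such posts is then the signal. The sketch below implements that reading only. The term lists are hypothetical placeholders, and each post's emotion label is assumed to come from an upstream classifier.

```python
import re
from dataclasses import dataclass


@dataclass
class Post:
    text: str
    emotion: str  # assumed label from an upstream emotion classifier


# Hypothetical term lists; real queries would be shaped by the political context.
ELECTION_CONTEXT = {"ballot", "ballots", "election", "polling", "vote", "voting"}
THEMES = {
    "hate": {"traitors", "vermin"},
    "distrust": {"rigged", "fraud", "stolen"},
    "obfuscation": {"closed", "cancelled", "moved"},
}
NEGATIVE_EMOTIONS = {"anger", "disgust", "sadness", "fear"}


def words(text):
    return set(re.findall(r"[a-z']+", text.lower()))


def matches(post):
    """Election context AND negative emotion AND (hate OR distrust OR obfuscation)."""
    w = words(post.text)
    in_context = bool(w & ELECTION_CONTEXT)
    negative = post.emotion in NEGATIVE_EMOTIONS
    themed = any(w & terms for terms in THEMES.values())
    return in_context and negative and themed


def disinformation_score(posts):
    """The 'x volume' term: how many posts satisfy the query."""
    return sum(1 for p in posts if matches(p))


if __name__ == "__main__":
    sample = [
        Post("The election is rigged", "anger"),
        Post("Polling station moved, don't bother", "fear"),
        Post("The election is rigged lol", "joy"),
        Post("Rigged game tonight", "anger"),
    ]
    print(disinformation_score(sample))  # 2
```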

Using these queries, KI Social helps answer the following questions:

- Who? Individuals, and the connections between them

- What? What they’re saying (text, images, emojis, logos)

- When? What time of day? How does this relate to election-related events?

- Where? Which platform/s? From what geographical location?

- Why?

  - What other conversations are they involved in?

  - What is the context of individuals’ discourse, and who else is involved?
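The Who, When, and Where questions map naturally onto simple aggregations once posts have been flagged. The sketch below assumes, hypothetically, that flagged posts carry an author, platform, region, and timestamp; it reports the most frequent authors, posting hours, days before election day, and platform/region pairs. The "Why?" questions depend on context and are left out of this sketch.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date, datetime


@dataclass
class FlaggedPost:
    author: str
    platform: str
    region: str
    posted_at: datetime


def summarize(posts, election_day, top=3):
    """Who / when / where summary for posts already flagged as likely disinformation."""
    return {
        "who": Counter(p.author for p in posts).most_common(top),
        "when_hour": Counter(p.posted_at.hour for p in posts).most_common(top),
        "days_before_election": Counter(
            (election_day - p.posted_at.date()).days for p in posts
        ).most_common(top),
        "where": Counter((p.platform, p.region) for p in posts).most_common(top),
    }


if __name__ == "__main__":
    flagged = [
        FlaggedPost("acct_a", "Twitter", "ON", datetime(2019, 10, 20, 9, 15)),
        FlaggedPost("acct_a", "Twitter", "ON", datetime(2019, 10, 20, 9, 40)),
        FlaggedPost("acct_b", "Reddit", "QC", datetime(2019, 10, 18, 21, 5)),
    ]
    print(summarize(flagged, date(2019, 10, 21)))
```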

Part of a 5-part series on Monitoring Online Discourse During An Election:

PART TWO: Identifying Disinformation

PART THREE: Managing Operational issues

PART FOUR: KI Design National Election Social Media Monitoring Playbook

PART FIVE: Monitoring Political Financing Issues

[1] This article reflects the views of KI Design, and not those of Elections Canada. For the full report on how Elections Canada used social media monitoring tools, including those created by KI Design, in the 2019 federal election, see Office of the Chief Electoral Officer of Canada, Report on the 43rd General Election of October 21, 2019: https://www.elections.ca/res/rep/off/sta_ge43/stat_ge43_e.pdf.

[2] Communications Security Establishment, Cyber Threats to Canada’s Democratic Process, Government of Canada 2017, page 5.

[3] All the posts below are in the public domain; nevertheless, we have removed the identity of the poster in the screenshots we provide.

[4] Democracy Reporting International, “Discussion Paper: Social Media Monitoring in Elections,” December 2017, page 2; online at: https://democracy-reporting.org/wp-content/uploads/2018/02/Social-Media-Monitoring-in-Elections.pdf